Prosocial Dynamics Lab

Prosociality is puzzling: prosocial individuals contribute to benefiting others, yet they must often incur a cost to do so. Why do such altruistic behaviors persist rather than being outcompeted by selfish ones? And how can artificial intelligence applications be harnessed to sustain prosociality within systems of artificial learning agents and humans? Solving the puzzle of prosociality is essential to tackling some of the most pressing challenges that our society faces.

Beyond human groups, understanding prosocial behavior is fundamental in multiagent systems. In these systems, multiple computational agents, with varying degrees of autonomy, attempt to fulfill their goals while interacting with others. If agents can learn and adapt over time, it is important to understand how to design interaction rules and learning protocols that incentivize cooperation and guarantee satisfactory long-term rewards. Prosociality can here be measured as the probability of using a policy that leads to high collective benefits, even at the expense of individual payoffs. Although systems of artificial agents can be directly designed to cooperate with others, the problem of designing prosocial systems persists under decentralized control, where each agent independently aims to maximize its own long-term payoff.
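As a minimal illustration of this measure, the sketch below assumes a two-player donation game, a standard textbook social dilemma that is not named in the text above. The class `DonationGame`, the function `prosociality`, and the values of `benefit` and `cost` are hypothetical choices made only for this example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class DonationGame:
    """Hypothetical two-player donation game (illustrative assumption)."""
    benefit: float = 3.0  # assumed benefit b received when the partner cooperates
    cost: float = 1.0     # assumed cost c paid by an agent that cooperates

    def payoff(self, my_coop: bool, other_coop: bool) -> float:
        # An agent gains b if its partner cooperates and pays c if it cooperates.
        return self.benefit * other_coop - self.cost * my_coop


def prosociality(actions: Sequence[bool]) -> float:
    """Empirical probability of choosing the cooperative action."""
    if not actions:
        raise ValueError("actions must be non-empty")
    return sum(actions) / len(actions)


game = DonationGame()
mutual_cooperation = game.payoff(True, True)   # b - c for each agent
free_riding = game.payoff(False, True)         # b for the defector
exploited = game.payoff(True, False)           # -c for the lone cooperator
mutual_defection = game.payoff(False, False)   # 0 for each agent

# Fraction of cooperative choices in a hypothetical action history.
history = [True, False, True, True]
level = prosociality(history)
```

With `benefit > cost`, mutual cooperation pays more than mutual defection, yet each agent earns more by defecting whatever its partner does. This is the tension that decentralized, payoff-maximizing learners face.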

The problems of cooperation in multiagent systems and human societies are no longer independent. Humans co-exist with artificial agents, both in the physical world and on online platforms. The challenge of understanding human cooperation is today entangled with the challenge of designing artificial agents and algorithms that facilitate prosocial interactions both online and offline. Moreover, understanding human cooperation can provide invaluable knowledge on how to design artificial cooperation (and vice versa).

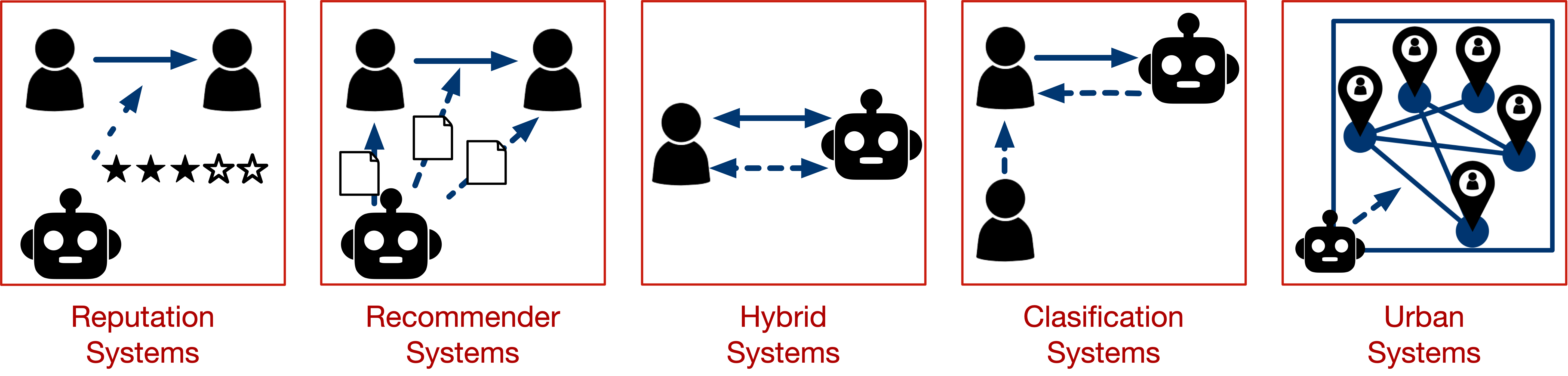

At the Prosocial Dynamics Lab, a new group at SIAS, we focus on five key decision-making domains where a combination of tools at the interface of AI, multiagent systems and population dynamics can improve our ability to design increasingly prosocial systems: 1) reputation systems, 2) recommender systems, 3) hybrid systems, 4) classification systems and 5) urban systems. These five domains share two features: understanding the interrelated dynamics of human and agent behavior is essential in each of them, and achieving socially desirable outcomes in each requires solving social dilemmas of cooperation, fairness and prosociality.

More details are available in a recent AI Magazine article.

(Prosocial Dynamics Lab, December 2024)